Penda’s Impact Validated by Two Independent Studies Showing Major Student Growth

Author:

Dr. Steven L. Miller,

Elite Performance Solutions

33,000 students, 13 independent implementations

This meta-analysis synthesized 13 independent school-based data samples (N = 33,306 students) that compared the effects of the Penda Learning science platform with those of control cohorts. The samples were drawn from multiple school districts and come from studies that meet the Tier I–III evidence requirements outlined in the Every Student Succeeds Act (ESSA). A random-effects model was used to combine the studies statistically, which quantifies an overall effect size, increases statistical power, helps resolve discrepancies between individual studies, and yields more generalizable results. Additionally, we sought to identify factors that moderate the program's impact on science proficiency and broader community outcomes. Each school provided science assessment performance as measured by the Florida State Science Assessment (FSSA; Grades 5 and 8) or the NWEA MAP Science Assessment (Grades 4 through 8).
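For readers unfamiliar with random-effects pooling, the sketch below shows one common way such a model is fitted. It assumes the DerSimonian-Laird estimator, which the summary does not name, and the effect sizes and variances are made-up illustrative values, not data from the Penda studies. The function name `random_effects_pool` is hypothetical.

```python
import math

def random_effects_pool(effects, variances):
    """Pool study effect sizes with the DerSimonian-Laird estimator (assumed method)."""
    k = len(effects)
    w = [1.0 / v for v in variances]                       # inverse-variance (fixed-effect) weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)                          # between-study variance
    w_star = [1.0 / (v + tau2) for v in variances]         # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0  # % of variation due to heterogeneity
    return {
        "pooled": pooled,
        "se": se,
        "ci_low": pooled - 1.96 * se,
        "ci_high": pooled + 1.96 * se,
        "z": pooled / se,
        "q": q,
        "tau2": tau2,
        "i2": i2,
    }

# Hypothetical per-study Hedges' g values and sampling variances (illustration only).
effects = [0.42, 0.58, 0.76, 0.95]
variances = [0.004, 0.006, 0.005, 0.010]

result = random_effects_pool(effects, variances)
print(f"pooled g = {result['pooled']:.2f} "
      f"[{result['ci_low']:.2f}, {result['ci_high']:.2f}], I2 = {result['i2']:.0f}%")
```

Because the between-study variance is added to every study's sampling variance, large and small studies are weighted more evenly than in a fixed-effect model, which is why the approach suits samples drawn from different districts and assessments.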

Across all studies reporting Science assessments, the average standardized mean difference was Hedges’ g = 0.68 [95% CI: 0.54, 0.82], Z = 9.51, p < .001. The random-effects model found significant heterogeneity (Q(7) = 260.93, p < .001; I² = 96%), prompting moderator analyses to explore differential impacts across student groups and program fidelity conditions. A comparison of the mean effect (Hedges’ g) between schools using the NWEA MAP Science assessment and schools using the Florida State Science Assessment (FSSA) as the outcome measure showed average effect sizes of g = 0.58 [95% CI: 0.44, 0.73] and g = 0.76 [95% CI: 0.50, 1.03], respectively. The null hypothesis that these effects came from the same group of effects was rejected (Z = 9.63, p < .001).
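The reported Z statistic for the overall effect can be related to its confidence interval with simple arithmetic. The sketch below assumes a symmetric, normal-theory 95% interval (critical value 1.96), which the summary does not state explicitly; the helper name `z_from_ci` is hypothetical. Because the published bounds are rounded to two decimals, the result only approximates the reported Z = 9.51.

```python
def z_from_ci(estimate, ci_low, ci_high, z_crit=1.96):
    """Recover the standard error and Z statistic from a symmetric confidence interval."""
    se = (ci_high - ci_low) / (2.0 * z_crit)  # the interval spans 2 * z_crit standard errors
    return estimate / se, se

# Overall effect as reported: g = 0.68, 95% CI [0.54, 0.82].
z, se = z_from_ci(0.68, 0.54, 0.82)
print(f"SE = {se:.3f}, Z = {z:.2f}")
```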

Schools using the Florida State Science Assessment showed a Hedges’ g = 0.76 [95% CI: 0.50, 1.03], Z = 5.7, p < .001. This represents an estimated 28% gain over the control science instruction conditions, can be benchmarked as two-plus years of typical academic learning achieved within a single school year, and resulted in significantly higher passing rates on the FSSA. These effects are statistically rare within the field of education, exceeding the 99th percentile of program impacts reported in Kraft’s (2020) national database of over 700 intervention studies, where the median effect is 0.1 standard deviations.
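One way to read the 28% figure is as an improvement index: the expected percentile gain of the average intervention student relative to the control-group median, obtained from the standard normal distribution. The summary does not say how the figure was derived, so this interpretation is an assumption; the function names below are hypothetical.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def improvement_index(g):
    """Percentile points gained by the average treated student over the control median."""
    return (normal_cdf(g) - 0.5) * 100.0

# Effect sizes reported in the summary.
for label, g in [("Overall", 0.68), ("FSSA", 0.76), ("NWEA MAP", 0.58)]:
    print(f"{label}: g = {g:.2f}, improvement index = {improvement_index(g):.0f} percentile points")
```

Under this reading, g = 0.76 corresponds to roughly 28 percentile points, which agrees with the gain stated for the FSSA schools.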

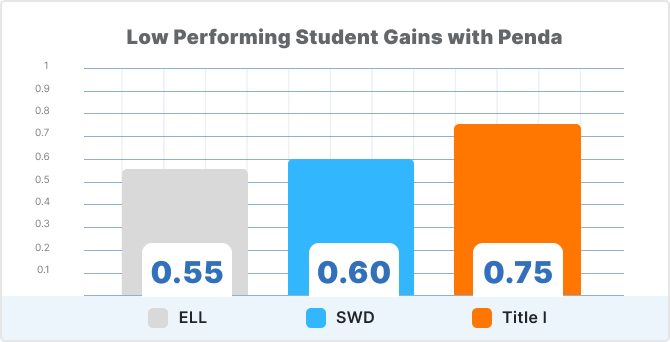

Further moderator testing revealed robust and positive effects for student subgroups: Title I (g = 0.73), students with disabilities (g = 0.52), and English language learners (g = 0.42). Notably, mastery-based engagement, defined as completing learning activities with an accuracy of ≥80%, displayed a dose-response relationship, with low, moderate, and high mastery associated with effect sizes (g) of 0.56, 0.89, and 1.40, respectively. These findings underscore that the quality of engagement, rather than time-on-task alone, predicts the largest achievement gains on independent normed assessments. Together, these results indicate that Penda Learning is both practical and equitable, supporting student progress across demographic and need-based subgroups.

The policy relevance of these findings lies in their dual implications for educational equity and economic vitality. Penda Learning’s effect size surpasses both the What Works Clearinghouse benchmark for a “substantively important” result (≥0.25 SD) and the average gains achieved by most digital learning interventions (Kraft, 2020, 2023; Bloom et al., 2008). The consistent subgroup effects across ELL, SWD, and Title I populations provide actionable evidence that technology-based mastery learning can help close achievement gaps that have persisted for decades. The magnitude of benefit for Florida schools, particularly the 20–25 percentage-point proficiency improvement observed in FSSA results, suggests that scalable, standards-aligned digital platforms can serve as powerful tools for advancing state ESSA goals and improving district accountability metrics.

Collectively, the evidence presented here positions Penda Learning as a promising and scalable science intervention that meets ESSA evidence standards, delivering both substantial educational value and measurable community benefits.

Author:

Dr. Steven L. Miller,

Elite Performance Solutions

46 schools, 19,500 students, Grades 3-12

The NWEA Measures of Academic Progress (MAP) Science Assessment was the measurement instrument from fall pre-test to spring post-test.

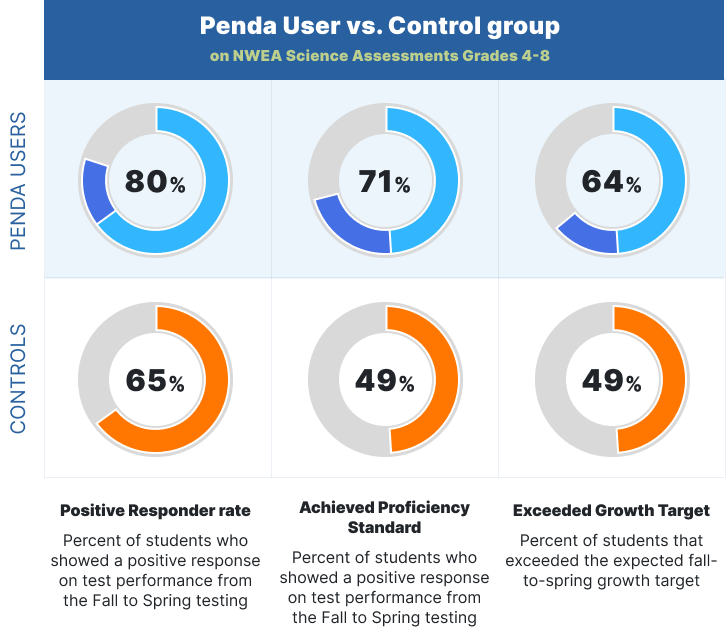

This quasi-experimental, multi-site study examined the impact of Penda Learning, a computer-based science instructional and intervention platform, on student science achievement across 46 schools and 19,500 students in Grades 3 through 12. The intervention group, consisting of classrooms implementing Penda Learning, was compared to a control group receiving business-as-usual science instruction in Grades 3 through 8 (n = 18,738). The platform was aligned with state science standards, curriculum, and instruction. Student performance was assessed using the NWEA Measures of Academic Progress (MAP) Science Assessment at both pre-test (Fall) and post-test (Spring). A repeated-measures MANCOVA was conducted using pre-test scores as a covariate. Results revealed statistically significant improvements in science achievement for students in the intervention group across all grade levels and demographic subgroups, with a dose-response relationship observed: greater usage was associated with higher learning gains.

Students in the intervention group showed increases in estimated science proficiency rates that were 20% higher than those of their peers in the control group. These findings suggest that computer-based instruction using Penda Learning produced meaningful gains in both psychometric growth and science proficiency, with implications for future educational equity, STEM workforce development, and economic mobility. This study meets Tier II (“moderate evidence”) standards of the Every Student Succeeds Act (ESSA) based on the U.S. Department of Education guidelines.

Participating schools provided student data in compliance with the Family Educational Rights and Privacy Act (FERPA), ensuring that all data collection, handling, and reporting procedures adhered to federal guidelines for the protection of student privacy.

Science proficiency in classrooms today changes the opportunities open to these students tomorrow and the economy around them.